Artificial Neural Networks

-

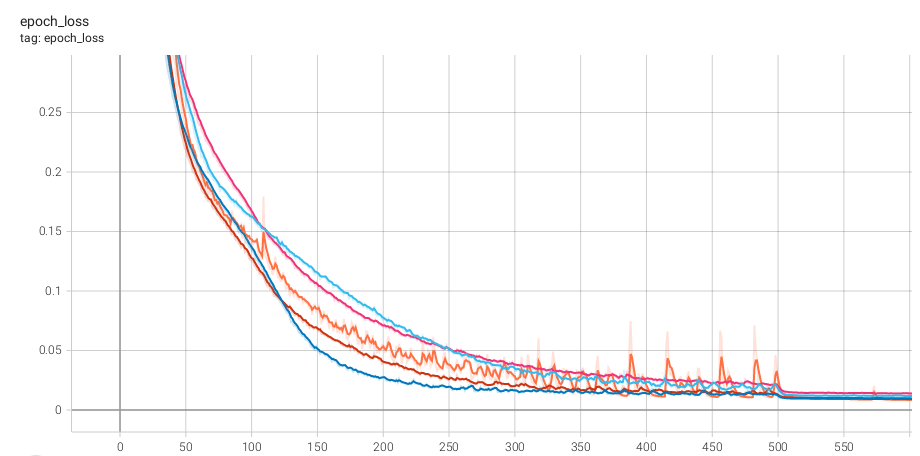

In my previous post about ThoughtNet, an attention-based neural architecture for variable-compute inference, I highlighted two limitations that I encountered with it: While trying to solve the second problem, I stumbled across a surprising way to stabilize training convergence as well. Was ThoughtNet Cheating? While attempting to understand why my ThoughtNet models weren’t generalizing much…

-

The majority of today’s artificial neural network (ANN) architectures perform a constant amount of computation at inference time regardless of their inputs. This includes all recent GPT-style LLMs1 and other transformer-based architectures. Whether you ask an LLM to complete the series “1, 2, 3, …”, or you ask it to solve a complicated logic riddle,…